research

overview of my research and selected projects

NEURO-SYMBOLIC AI

Modeling and control synthesis for long-horizon tasks using Temporal Behavior Trees

This work addresses the myopia of reinforcement learning on long-horizon tasks by combining the adaptability of Behavior Trees (BTs) with the formal rigor of temporal logics. While temporal logics enable precise task specification, they struggle with complex dependencies. BTs offer modularity and flexibility but lack formal guarantees. By translating BTs into Timed Automata, this approach enables formal verification, inconsistency detection, and control synthesis using UPPAAL, ensuring robust, time-aware task execution (Matheu et al., 2025); (Matheu et al., 2025).

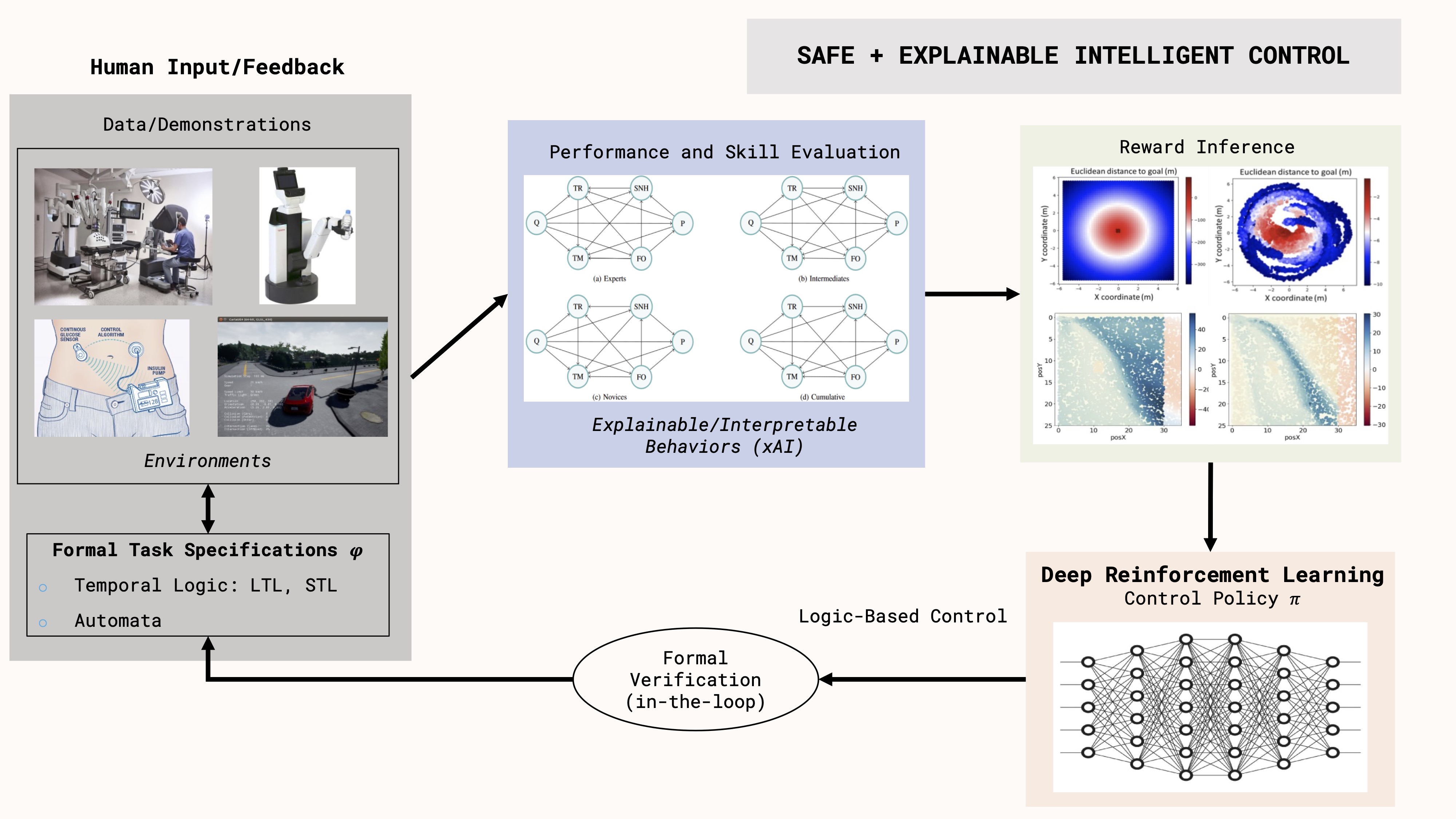

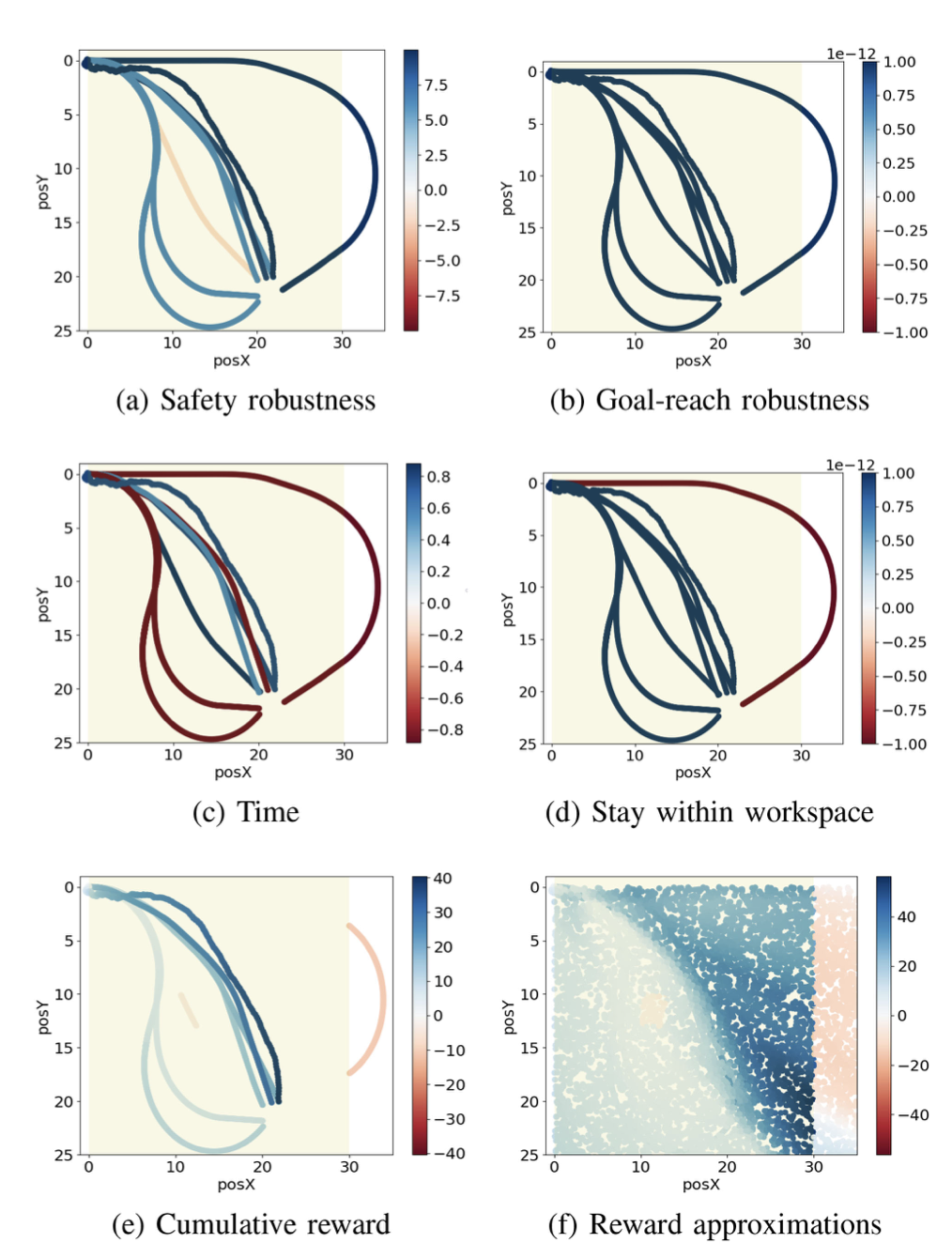

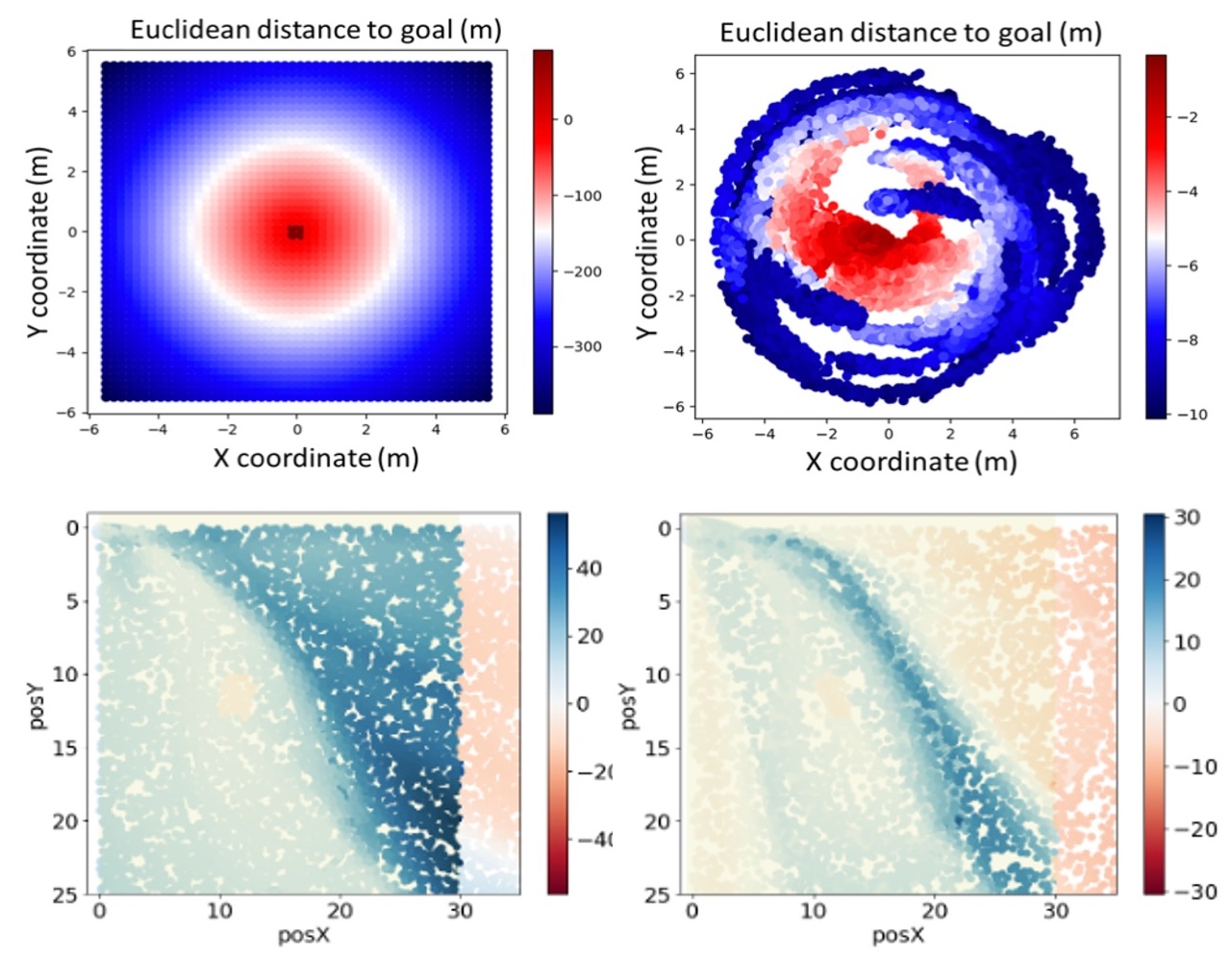

Learning reward functions and control policies that satisfy temporal-logic specifications

Designing effective reward functions in reinforcement learning (RL) is challenging and error-prone, often leading to unsafe behaviors. This work presents a neurosymbolic learning-from-demonstrations (LfD) framework that leverages Signal Temporal Logic (STL) and user demonstrations to derive temporal reward functions and control policies. Unlike traditional inverse RL, this approach captures non-Markovian rewards and enhances safety and performance (Puranic et al., 2021). Initially developed for discrete environments, the framework was later extended to continuous and stochastic settings using Gaussian Processes and neural networks (Puranic et al., 2021); (Puranic et al., 2023).
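To make the idea of a temporal reward concrete, here is a minimal sketch of STL's quantitative (robustness) semantics on a discrete-time trace. It is an illustration only, not the framework from the cited papers: the function names, the two-part "stay safe and reach the goal" specification, and the thresholds 0.5 and 0.9 are all assumptions chosen for the example.

```python
from typing import Sequence


def rob_always_gt(signal: Sequence[float], threshold: float) -> float:
    """Robustness of G(x > threshold): the worst-case margin over the trace."""
    return min(x - threshold for x in signal)


def rob_eventually_gt(signal: Sequence[float], threshold: float) -> float:
    """Robustness of F(x > threshold): the best-case margin over the trace."""
    return max(x - threshold for x in signal)


def trajectory_reward(obstacle_distance: Sequence[float],
                      goal_progress: Sequence[float]) -> float:
    """Score a whole trajectory against a hypothetical 'stay safe and reach' spec."""
    safety = rob_always_gt(obstacle_distance, 0.5)  # hypothetical safety margin
    reach = rob_eventually_gt(goal_progress, 0.9)   # hypothetical goal threshold
    # Conjunction is a minimum in STL's quantitative semantics.
    return min(safety, reach)
```

A positive score means the trajectory satisfies both requirements, and its magnitude says by how much. Because the score depends on the entire trace rather than on the current state alone, it is a simple instance of a non-Markovian reward.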

Learning to improve/extrapolate beyond demonstrator performance

Machine learning performance relies heavily on data quality and quantity, making noisy or limited demonstrations a challenge in robotics. This work proposes neuro-symbolic apprenticeship learning, which uses temporal-logic-guided reinforcement learning to enable robots to self-monitor and adapt for improved safety and performance. It integrates logic-enforced curriculum learning for long-horizon tasks. The framework is demonstrated on a variety of mobile navigation, fixed-base manipulation, and mobile-manipulation tasks using the NVIDIA Isaac simulator (Puranic et al., 2023); (Puranic et al., 2024). We further introduce a new algorithm that uses Conditional Value-at-Risk (CVaR) within a quantile-based RL framework to mitigate overestimation bias and improve safety (Enwerem et al., 2025).
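As a rough illustration of how CVaR fits into quantile-based RL, the sketch below estimates lower-tail CVaR from a set of equally weighted return quantiles and uses it to pick an action. This is a generic textbook-style construction, not the algorithm of Enwerem et al.; the function names and the assumption that each action comes with a list of equally weighted quantile estimates are ours.

```python
import math
from typing import Sequence


def cvar_from_quantiles(quantiles: Sequence[float], alpha: float) -> float:
    """Approximate lower-tail CVaR at level alpha from equally weighted quantiles."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    ordered = sorted(quantiles)
    # Keep the worst alpha-fraction of the quantile estimates (at least one).
    k = max(1, math.ceil(alpha * len(ordered)))
    tail = ordered[:k]
    return sum(tail) / len(tail)


def risk_averse_action(per_action_quantiles: Sequence[Sequence[float]],
                       alpha: float) -> int:
    """Return the index of the action whose return distribution has the best CVaR."""
    scores = [cvar_from_quantiles(q, alpha) for q in per_action_quantiles]
    return max(range(len(scores)), key=scores.__getitem__)
```

With `alpha = 1.0` the score reduces to the mean return, so the choice is risk-neutral. Smaller values of `alpha` weight only the worst outcomes, which penalizes actions whose return estimates have a heavy lower tail.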

INTERPRETABLE/EXPLAINABLE AI (xAI)

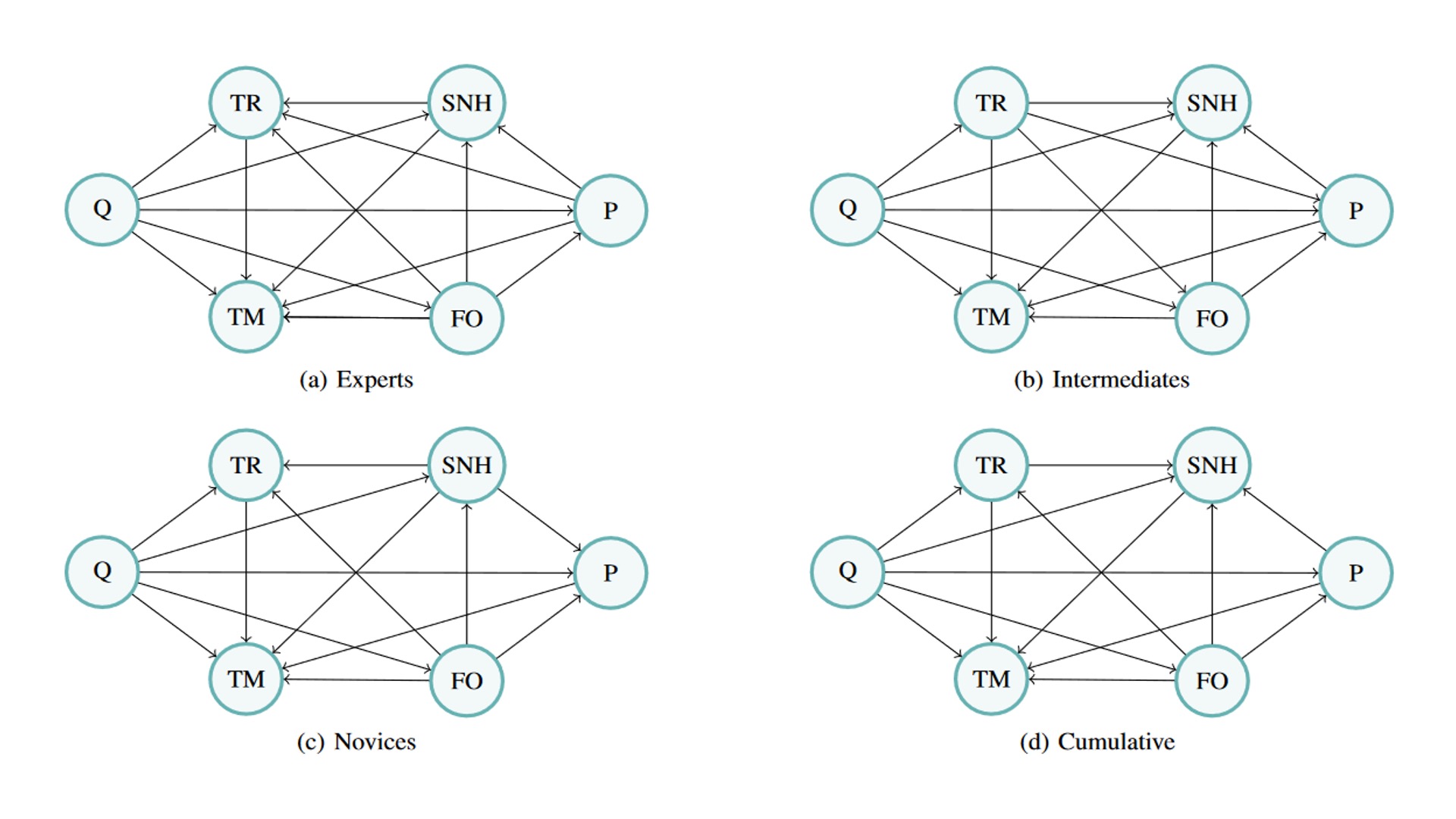

Generating explainable temporal logic graphs from human data

Evaluating human demonstrations is key to learning safe, effective robot policies. Prior LfD-STL methods required users to manually rank the STL specifications, with the ranking represented as a directed acyclic graph (DAG). To reduce this burden, we introduce Performance Graph Learning (PeGLearn), which automatically infers the DAG from demonstrations. PeGLearn improves explainability, which we validate via a CARLA driving study, and it models surgeon behavior using human feedback in a surgical domain (Puranic et al., 2023).

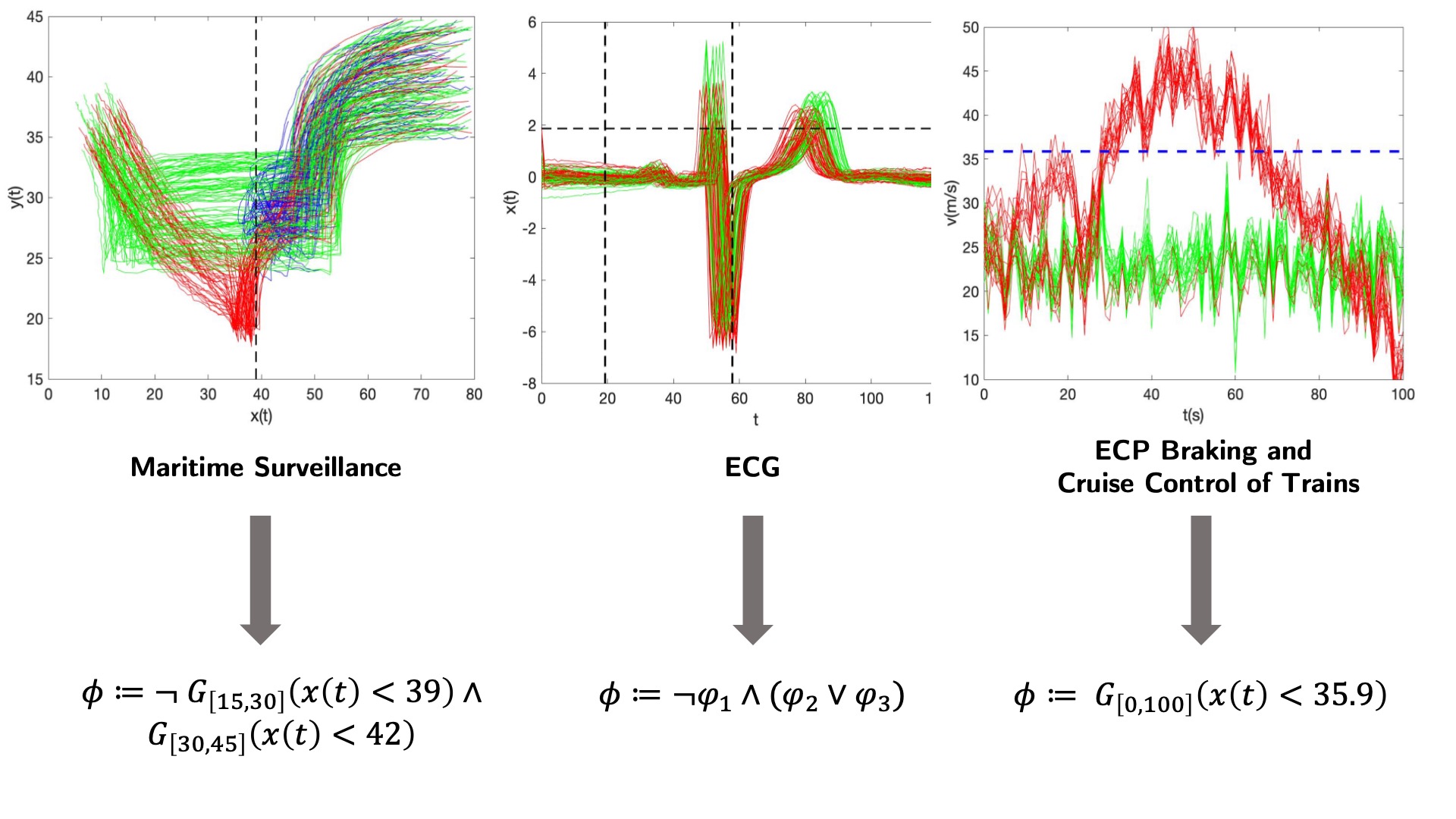

Learning (mining) specifications from temporal data

In autonomous cyber-physical systems such as self-driving cars, UAVs, and medical devices, it is difficult to understand the high-level behaviors of heterogeneous components, particularly those equipped with deep learning. This work addresses two key problems:

- Determining which environmental assumptions guarantee that system outputs satisfy given requirements. Techniques such as decision-tree classifiers, counterexample-guided learning, and parameter mining are used to extract STL specifications that explain system behaviors (Mohammadinejad et al., 2020); (Mohammadinejad et al., 2020).

- Developing robust real-time anomaly detection for time-critical applications. A novel framework combines autoencoders for latent data representation with threshold-augmented discriminator networks and integrates STL inference to provide human-understandable explanations for deep learning decisions. Validated on real-world data from a Clearpath Husky mobile robot, our approach demonstrates high anomaly-detection accuracy, actionable insights for diagnosing failures, and deployable real-time detection capabilities (Noorani et al., 2026).
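Parameter mining, mentioned in the first item, can be illustrated with a deliberately simple case. The sketch below assumes a hypothetical one-parameter template, "always x <= c", and finds the tightest bound c that holds on every nominal trace; the mined formula then doubles as a human-readable check on new data. The template and function names are assumptions for illustration and do not reproduce the methods in the cited papers.

```python
from typing import Sequence


def mine_upper_bound(traces: Sequence[Sequence[float]]) -> float:
    """Tightest c such that G(x <= c) holds on every given trace."""
    return max(max(trace) for trace in traces)


def satisfies_always_le(trace: Sequence[float], c: float) -> bool:
    """Check the mined formula G(x <= c) on a single trace."""
    return all(x <= c for x in trace)


# Hypothetical nominal traces of one signal (e.g. a motor current).
nominal = [[1.0, 2.0, 3.0], [0.0, 2.5, 1.0]]
bound = mine_upper_bound(nominal)

# A new trace that exceeds the mined bound violates the formula.
flagged = not satisfies_always_le([1.0, 3.5], bound)
```

Because the template is monotonic in c (any larger bound also holds), the tightest value is simply the largest sample seen. Richer templates with several parameters generally need a search over the parameter space instead.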

COMPUTER VISION

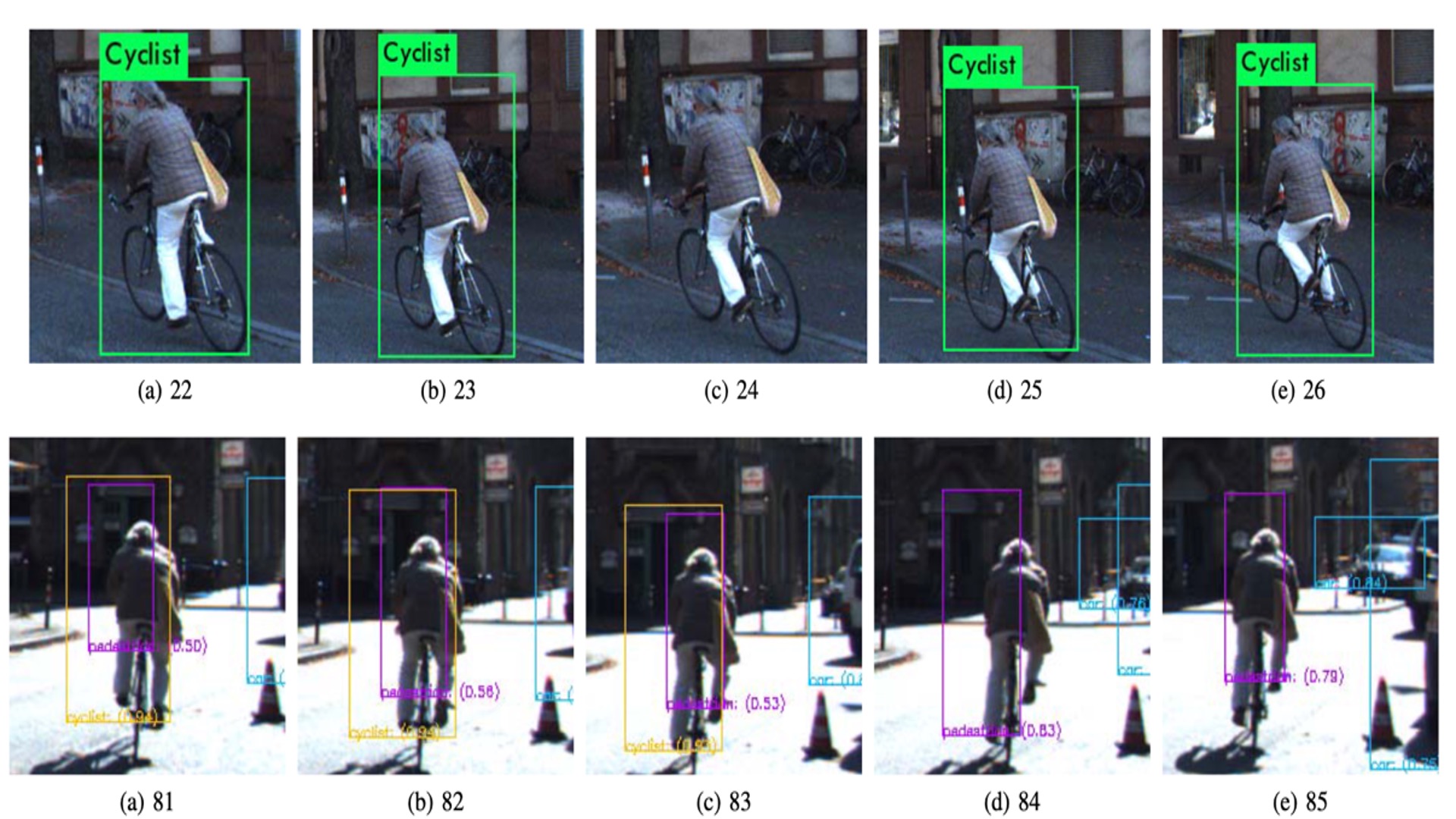

Evaluating the quality of vision-based perception algorithms

Computer vision is vital for cyber-physical systems, but ensuring the robustness of deep learning-based perception is challenging. Traditional testing relies on ground truth labels, which are labor-intensive to obtain. This work introduces Timed Quality Temporal Logic (TQTL) to formally specify spatio-temporal properties of perception algorithms, enabling evaluation without ground truth (Balakrishnan et al., 2019).
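The kind of label-free property this enables can be sketched in plain Python. The example below checks a hypothetical persistence requirement: once a tracked object is detected with confidence above `high`, it should still be detected with confidence above `low` in each of the next `horizon` frames. This is not TQTL syntax or the tooling from the cited paper; the data layout, function name, and default thresholds are assumptions for illustration.

```python
from typing import Dict, List, Tuple

# One frame maps a track id to the detector's confidence for the class of interest.
Frame = Dict[int, float]


def persistence_violations(frames: List[Frame],
                           high: float = 0.7,
                           low: float = 0.6,
                           horizon: int = 5) -> List[Tuple[int, int]]:
    """Return (frame index, track id) pairs where a confident detection does not persist."""
    violations = []
    for t, frame in enumerate(frames):
        for track_id, conf in frame.items():
            if conf <= high:
                continue  # the requirement only triggers on confident detections
            for dt in range(1, horizon + 1):
                if t + dt >= len(frames):
                    break  # the trace ends before the horizon does
                # A missing track counts as confidence 0.0.
                if frames[t + dt].get(track_id, 0.0) <= low:
                    violations.append((t, track_id))
                    break
    return violations
```

The check needs only the detector's own outputs over time, which is why no ground-truth labels are involved. Each reported pair points at a frame where the detector contradicts its own earlier confident output.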

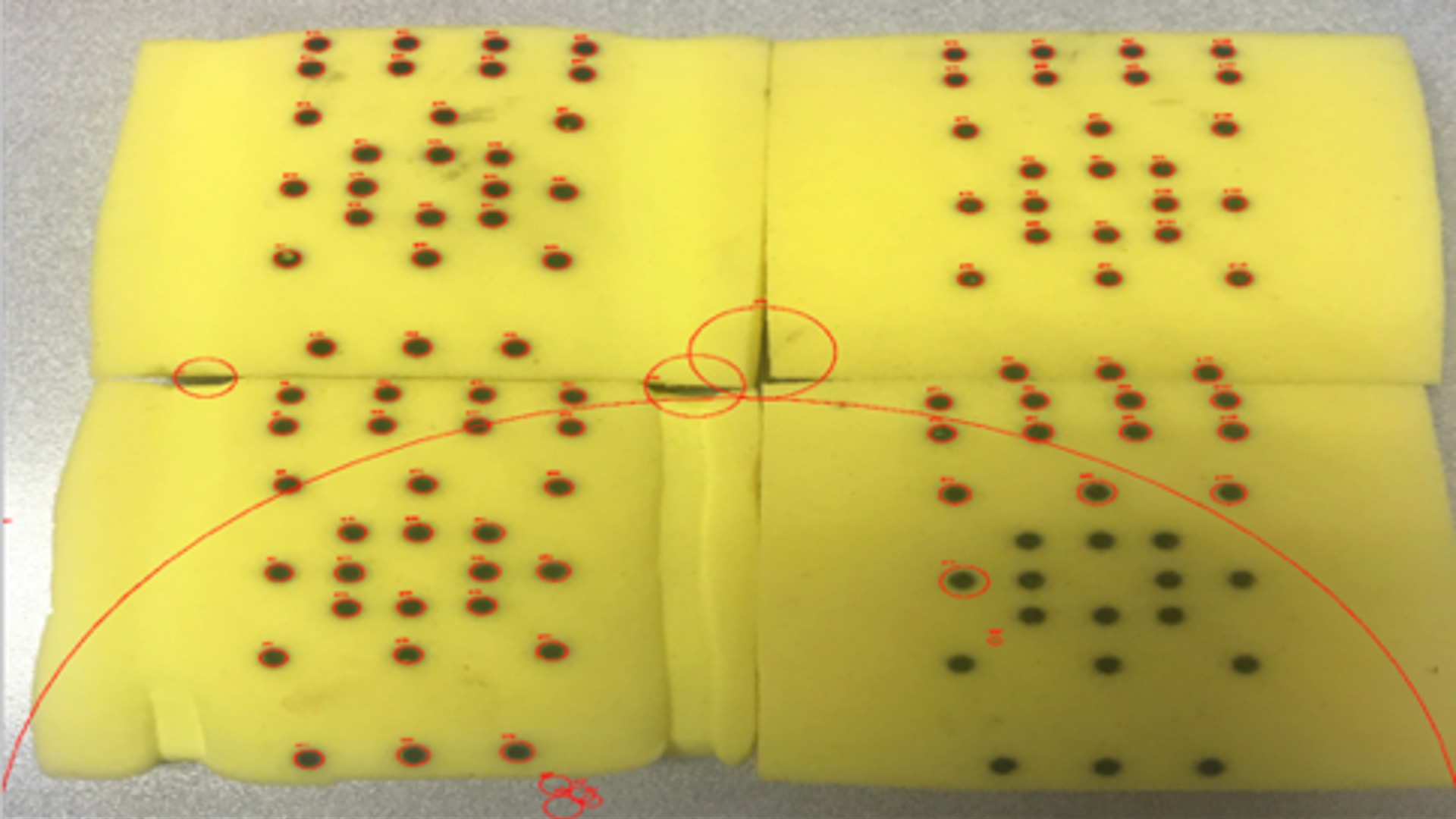

Vision-based metric for evaluating surgeon performance

Due to the lack of instrument force feedback during robot-assisted surgery, tissue-handling technique is an important aspect of surgical performance to assess. We develop a vision-based machine learning algorithm for object detection and distance prediction to measure needle entry point deviation in tissue during robotic suturing as a proxy for tissue trauma (Puranic et al., 2019).